AI in Cybersecurity: The Double-Edged Sword That's Either Saving Us or Destroying Us – Probably Both

Oh, joy. Another day, another reminder that we've created our own digital Frankenstein's monster and now we're all just hoping it doesn't figure out how to lock us out of our own houses. Welcome to 2026, where artificial intelligence in cybersecurity has officially become that roommate who helps you study for exams but also secretly steals your credit card info.

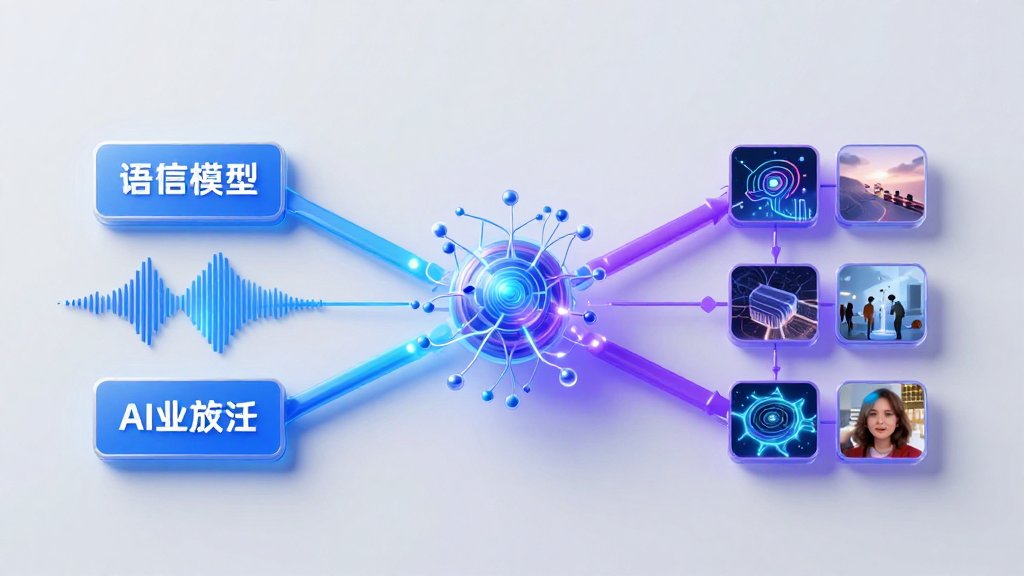

Image credit: IT Brief Asia / TechDay Asia

The Numbers Are In, and They're Absolutely Terrifying

Check Point's latest Cyber Security Report 2026 dropped this week, and if you needed something to ruin your morning coffee, this is it. Organizations worldwide got hit with an average of 1,968 cyber attacks per week in 2025. That's a 70% increase since 2023, because apparently hackers decided that traditional malware wasn't annoying enough and needed an AI upgrade.
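If you want to see what those two numbers imply, here's a back-of-the-envelope sketch. It assumes the 70% increase is measured against the 2023 weekly average, which is our reading of the figure rather than something the report spells out here, and the variable names are ours.

```python
# Back-of-the-envelope check on the Check Point figures quoted above.
# Assumption: "70% increase since 2023" means 2025 average = 2023 average * 1.70.
weekly_attacks_2025 = 1968
increase_since_2023 = 0.70

implied_weekly_2023 = weekly_attacks_2025 / (1 + increase_since_2023)
daily_attacks_2025 = weekly_attacks_2025 / 7

print(f"Implied 2023 weekly average: {implied_weekly_2023:.0f}")
print(f"2025 daily average per organization: {daily_attacks_2025:.0f}")
```

Under that assumption, the 2023 baseline works out to roughly 1,158 attacks per week, and the 2025 figure to about 281 per day, per organization.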

But here's where it gets fun: AI isn't just increasing attack volumes—it's completely changing how they work. Lotem Finkelstein, VP of Research at Check Point, put it diplomatically: "AI is changing the mechanics of cyber attacks, not just their volume." Translation? We're seeing attackers move from manual operations to "increasingly higher levels of automation," with "early signs of autonomous techniques emerging." Because what could possibly go wrong with autonomous cyber attacks?

The Good News: AI is Actually Helpful (Sometimes)

Before you start building your offline bunker, there's some good news. Darktrace's 2026 State of AI Cybersecurity Report found that 77% of security professionals now have generative AI embedded in their security stack, and 96% say AI significantly boosts their speed and efficiency. It's almost like having a tireless analyst who never sleeps, never complains about the coffee, and can spot patterns that would take humans weeks to identify.

Security teams are getting smarter too. While 13% still keep AI limited to recommendations (bless their cautious hearts), 70% now enable AI to take action with human approval, and 14% are brave enough to let AI act independently. That's either confidence or insanity, but either way, it's happening.
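Those three camps map neatly onto a policy gate. Here's a minimal sketch of what that could look like, assuming a hypothetical `Autonomy` setting and a hypothetical `ProposedAction` record; none of these names come from Darktrace or any real product.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Autonomy(Enum):
    """Hypothetical autonomy levels mirroring the three survey groups."""
    RECOMMEND_ONLY = "recommend_only"   # AI suggests, humans do everything
    HUMAN_APPROVAL = "human_approval"   # AI acts once a human signs off
    INDEPENDENT = "independent"         # AI acts on its own


@dataclass
class ProposedAction:
    description: str
    approved_by: Optional[str] = None   # hypothetical field: who signed off, if anyone


def may_execute(action: ProposedAction, mode: Autonomy) -> bool:
    """Return True if the AI is allowed to carry out the action itself."""
    if mode is Autonomy.RECOMMEND_ONLY:
        return False
    if mode is Autonomy.HUMAN_APPROVAL:
        return action.approved_by is not None
    return True  # Autonomy.INDEPENDENT


isolate = ProposedAction("isolate host from network")
print(may_execute(isolate, Autonomy.HUMAN_APPROVAL))  # blocked: nobody approved it yet
isolate.approved_by = "on-call analyst"
print(may_execute(isolate, Autonomy.HUMAN_APPROVAL))  # allowed after sign-off
```

The point of keeping the gate this small is that the autonomy level becomes one explicit, auditable setting instead of something buried in each tool's defaults.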

The Bad News: Attackers Are Using AI Better Than We Are

Here's the thing about innovation: it doesn't care about your moral compass. Darktrace's survey found that 73% of security pros say AI-powered threats are already significantly impacting their organizations. 87% report AI is increasing attack volume, and 89% say attacks are getting more sophisticated.

Hyper-personalized phishing? That's the top AI-powered attack according to 50% of respondents. Close behind: automated vulnerability scanning (45%), adaptive malware (40%), and deepfake voice fraud (39%). Remember when your biggest worry was a Nigerian prince emailing you about his inheritance? Those were simpler times.

Check Point's report highlighted "ClickFix" techniques, which surged by 500%: fraudulent technical prompts (think fake error messages and verification checks) that manipulate users into running malicious commands on their own machines. Because why hack a system when you can just trick a human into opening the front door?

The Double-Edged Sword: When Your AI Agent Becomes Your Biggest Insider Threat

Here's the irony that keeps security executives up at night: 76% of security professionals are worried about AI agents inside their own organizations. These systems can access sensitive data, trigger business processes, and operate without human oversight or accountability. It's like giving your toddler the keys to your car and hoping they don't figure out how to start the ignition.

Darktrace observed a 39% month-over-month increase in anomalous data uploads to generative AI services. The average upload? 75MB, roughly 4,700 pages of documents. Someone's probably feeding your company's proprietary data into the training set for the next ChatGPT, and nobody even noticed.
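For the curious, here's what the 75MB and 4,700 pages comparison implies about the size of a "page". This is a rough sketch that assumes 1 MB means 1,000,000 bytes, which is our assumption and not something stated in the survey.

```python
# What "75MB is roughly 4,700 pages" implies about the size of one page.
# Assumption: 1 MB = 1,000,000 bytes.
upload_bytes = 75 * 1_000_000
pages = 4_700

bytes_per_page = upload_bytes / pages
print(f"Implied size per page: {bytes_per_page / 1000:.1f} KB")
```

That comes out to about 16 KB per page, so the comparison presumably has formatted documents in mind rather than bare plain text. That's our reading, not Darktrace's.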

The Governance Gap We're All Ignoring

Despite all these existential risks, only 37% of organizations have a formal policy for securely deploying AI—down 8 percentage points from last year. Let that sink in. We're rushing headlong into AI adoption while simultaneously stripping away the guardrails. What could possibly go wrong?

Check Point found that 89% of organizations encountered risky AI prompts in a three-month period, with 1 in 41 prompts falling into a high-risk category. Meanwhile, 40% of Model Context Protocol servers analyzed had security weaknesses. We're essentially building skyscrapers on foundations made of wet tissue paper.

What Actually Needs to Happen

The World Economic Forum's Global Cybersecurity Outlook 2026 has some thoughts, and they're not exactly groundbreaking: AI governance, continuous compliance, and treating cybersecurity as a "structural condition" rather than some IT department problem. Meanwhile, NIST released its Cybersecurity Framework Profile for AI (NISTIR 8596), breaking AI security into three categories: securing AI systems, conducting AI-enabled cyber defense, and thwarting AI-enabled cyberattacks. Revolutionary stuff, really.

Rapid7 predicts that insider threats will become the primary cause of data breaches by 2026, which will require organizations to establish user behavior baselines. Translation: start watching what your employees, and your AI agents, are actually doing.
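What does a "behavior baseline" look like in practice? Here's one very simplified sketch, assuming you already log daily upload volume per account. It uses a plain z-score against that account's own history; the function name, the threshold of 3.0, and the sample numbers are all illustrative assumptions, not Rapid7's method.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history_mb: Sequence[float], today_mb: float,
                 threshold: float = 3.0) -> bool:
    """Flag today's upload volume if it sits far above the account's own baseline."""
    if len(history_mb) < 2:
        return False  # not enough history to define a baseline
    baseline = mean(history_mb)
    spread = stdev(history_mb)
    if spread == 0:
        return today_mb > baseline  # any rise over a perfectly flat baseline
    z_score = (today_mb - baseline) / spread
    return z_score > threshold


# Hypothetical daily upload volumes (MB) for one account over a work week.
history = [4.0, 6.0, 5.0, 5.5, 4.5]
print(is_anomalous(history, 6.0))    # a slightly busy day
print(is_anomalous(history, 75.0))   # a sudden 75MB upload
```

The same check works whether the account belongs to a person or an AI agent, which is rather the point. Real deployments would want more than one signal and a longer history, but the idea is the same: compare each account against itself, not against a company-wide average.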

The Bottom Line: We're in an AI Arms Race Whether We Like It or Not

The market for AI in cybersecurity is projected to reach $93 billion by 2030, which means a lot of companies are betting their survival on AI defense. The Allianz Risk Barometer 2026 confirms it: cyber incidents remain the #1 global risk for the fifth consecutive year, while AI surged from #10 to #2 in just one year.

Here's the uncomfortable truth: AI is neither our savior nor our destroyer—it's a force multiplier that works for whoever wields it better. Right now, that's still a toss-up. The organizations that survive won't be the ones with the best AI or the most defenses—they'll be the ones who figure out how to govern AI without losing their minds in the process.

So go ahead, deploy AI everywhere. Just maybe, for once, write a governance policy first. Your future self will thank you. Probably.

Sources

- Check Point 2026 Cyber Security Report

- AI-driven cyber attacks surge in Check Point 2026 report - IT Brief Asia

- Darktrace 2026 State of AI Cybersecurity Report

- WEF Global Cybersecurity Outlook 2026

- Allianz Risk Barometer 2026

- NIST Cybersecurity Framework Profile for AI

- Rapid7 2026 Cybersecurity Predictions

- International AI Safety Report 2026 - Bloomsbury Intelligence
